Like many people, I’ve been entertaining myself at home by baking a ton and talking about my sourdough starter as if it were a real person. I’m pretty good at following recipes, but I decided I wanted to take things one step further and understand the science behind what differentiates a cake from a bread or a cookie. I also like machine learning so I thought: what if I could combine it with baking??!

I’ll start by explaining why baking presents an interesting ML problem. Then I’ll show you how I collected my own dataset, trained a simple model, deployed it, built a little app to get predictions, and used the model to invent a new recipe. If you’re only here for the recipe, I suppose you can skip ahead.

Why would you use machine learning for baking?

Maybe you’re thinking, couldn’t you just read a book or even a blog post that explains the sugar, fat, and flour ratios that make up different baked goods? Sure, I could do that. But my attention span has been pretty short these days, and that approach doesn’t really scale. If I learn enough to start inventing my own recipes, that only directly benefits the people who can eat them, which is currently not many.

Now maybe you’re thinking, couldn’t we solve this with traditional programming? For example, if the typical flour:liquid ratio for bread is 5:3, I could write a program like this:

    ratio = flour_amt / water_amt

    if ratio > 1.5 and ratio < 2:
        print("It's bread!")

That kind of works, but it’ll quickly get unwieldy. What if the flour:water ratio is close, but not exactly 5:3? What if I want to predict more than just bread? And what if I don’t feel like converting ingredient amounts into ratios? I am lazy (in certain ways) and I want to input my ingredient amounts without doing any math, and then have something magically tell me what it thinks it is.

Enter 🌟 machine learning 🌟

This is a great fit for ML because I can gather recipe data and train a model to identify patterns in that data. Since a lot of people have written about baking ratios, I’m going to assume there are some high-level patterns about them that I can teach a model to learn.

Hopefully by now I’ve convinced you that this will be a fun problem to solve with machine learning. But maybe you’re still skeptical, and you’re wondering: what’s the point of this? Don’t you already know what you’re baking when you follow a recipe? It seems silly to have a model tell you what you already know. All of that is true if I simply input an existing recipe into my model and ask it for a prediction. Remember, though, that the whole reason I’m doing this is to learn things like what makes a cake a cake, and not a cookie. Then I can experiment with different ratios to create a new recipe. Maybe I want to invent something that my model thinks is 50% cake, 50% cookie.

Creating the dataset

I couldn’t find an existing dataset of basic baking ingredients, so I decided to create my own very small one. Luckily there is no shortage of recipes on the internet, and I found 33 recipes each of breads, cakes, and cookies to make a dataset with 99 total recipes. To keep this problem relatively simple, I looked only at the following ingredients:

- Flour: If a recipe called for different types of flour, I counted it all towards the flour amount

- Sugar: I also combined different sugars (granulated, brown, etc.) as one amount

- Sourdough starter: If you know, you know

- Salt

- Yeast

- Milk

- Water

- Oil

- Eggs

- Baking powder

- Baking soda

- Butter

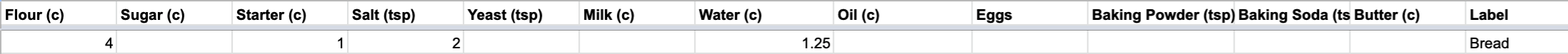

I tracked it all in a spreadsheet that looks like this:

I know, I’m using cups and teaspoons and not something more universal like grams. If my little model becomes a smash hit, I will try to add metric system support.

While collecting the data, I did my best to include various types of breads, cakes, and cookies so that the model could handle a diverse repertoire of baked goods.

Processing data

I went into this thinking I could feed my ingredient amounts directly into my machine learning model, since they’re already numbers and ML model inputs need to be numeric. Not quite. There are a few problems with the data in its current form.

First, my spreadsheet has a mix of units: I’m using cups for some ingredients, teaspoons for others, and eggs are just eggs. I don’t want my model to think that 1 unit of flour is the same as 1 unit of yeast (can you imagine what would happen there?). Since the majority of my data is in cups, I decided to convert everything else to cups. For teaspoons, this was fairly straightforward since 1 teaspoon is around .02 cups. For eggs, after some research I settled on 1 egg being approximately .2 cups. It’s not perfect, but now everything is in the same units.
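Here’s a minimal sketch of that conversion step in Python. The two conversion factors are the approximations quoted above; the `to_cups` function, the unit names, and the sample recipe are made up for illustration and aren’t taken from my spreadsheet.

```python
# Approximate conversion factors quoted in the post
CUPS_PER_TEASPOON = 0.02
CUPS_PER_EGG = 0.2


def to_cups(amount, unit):
    """Convert an ingredient amount to cups (hypothetical helper)."""
    if unit == "cup":
        return amount
    if unit == "tsp":
        return amount * CUPS_PER_TEASPOON
    if unit == "egg":
        return amount * CUPS_PER_EGG
    raise ValueError("Unsupported unit: {}".format(unit))


# Made-up recipe for illustration: ingredient -> (amount, unit)
recipe = {"flour": (2, "cup"), "salt": (1, "tsp"), "eggs": (2, "egg")}

in_cups = {name: to_cups(amount, unit) for name, (amount, unit) in recipe.items()}
print(in_cups)
```

Raising an error on unknown units is a deliberate choice here: silently passing through an unconverted value would put that ingredient on a different scale from everything else.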

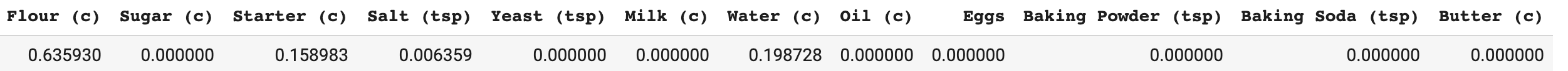

Next I needed a way to scale the data. Some recipes make 2 giant cakes, while others make a small loaf of bread. Converting all ingredient amounts to the same scale will ensure my model doesn’t give more weight to bigger recipes. I decided to scale amounts by converting each ingredient to a percentage of the total recipe. To do this I took the sum of each row, and then divided each ingredient by the sum to get its percentage. The bread recipe in the screenshot above becomes:

With my 99 recipes all converted to the same unit and scaled to percentages, it’s time to build and train a model.
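The percentage scaling described above can be sketched in a few lines. This is only an illustration: `to_percentages` and the two sample recipes are hypothetical, and the values are expressed as fractions of the total (multiply by 100 for percentages).

```python
def to_percentages(amounts_in_cups):
    """Divide each ingredient by the recipe total so the values sum to 1."""
    total = sum(amounts_in_cups.values())
    if total == 0:
        raise ValueError("Recipe has no ingredients")
    return {name: amount / total for name, amount in amounts_in_cups.items()}


# Made-up recipe, already converted to cups, and the same recipe doubled
small = {"flour": 2.0, "water": 1.0, "butter": 1.0}
doubled = {name: 2 * amount for name, amount in small.items()}

print(to_percentages(small))
# Both batch sizes scale to the same proportions
print(to_percentages(small) == to_percentages(doubled))
```

The doubled recipe scales to exactly the same values as the small one, which is the point of this step: the model only sees proportions, not batch size.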

Building a TensorFlow model

I’m going to use TensorFlow’s Keras API for this model, which makes the model code pretty short:

    import tensorflow as tf

    # num_ingredients is the number of ingredient columns in the dataset
    model = tf.keras.Sequential([
        tf.keras.layers.Dense(16, input_shape=(num_ingredients,)),
        tf.keras.layers.Dense(16, activation='relu'),
        tf.keras.layers.Dense(3, activation='softmax')
    ])

My model output is a softmax array, which means it’ll output the probability a particular recipe is a bread, cake, or cookie (in that order). Softmax means that all the probabilities will add to 1. So a 97% confident bread prediction would look like the following:

    [.97, .02, .01]

After training, my model has reached 90% accuracy. Pretty cool! But can I trust it, or is it overfitting the training data?
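To make the softmax output a little more concrete, here’s a small pure-Python sketch of what a softmax layer does to the raw scores coming out of the layer before it. The raw scores below are made up for illustration; they aren’t values from my trained model, and Keras does this computation for you.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    # Subtracting the max first keeps exp() from overflowing on large scores
    highest = max(scores)
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


labels = ["Bread", "Cake", "Cookies"]
raw_scores = [4.0, 0.1, -0.6]  # hypothetical raw scores

probs = softmax(raw_scores)
for label, prob in zip(labels, probs):
    print("{}: {:.2f}".format(label, prob))
```

Whatever the raw scores are, the outputs are all between 0 and 1 and add up to 1, and the largest score always gets the largest probability.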

🚨This is definitely not an ML best practice, but I will be testing my model on real-world, production data. Mostly because I got tired of copying enough recipes into my spreadsheet to make a test set. Do not try this at home 🚨

To test the model, I made up the following recipe:

- Flour: 1 c

- Sugar: 1 c

- Salt: ½ tsp

- Eggs: 1

- Butter: 1 c

The model predicts cookies with 84% confidence. Sounds reasonable. What about an edge case, like a bread without yeast or sourdough starter? Let’s try:

- Flour: 4 c

- Salt: ½ tsp

- Water: 1.2 c

- Oil: 3 tsp

- Baking powder: 2 tsp

My model says bread with 99% confidence, nice! If I instead wrote a series of if statements to handle that edge case, you can see how it would quickly get very long.

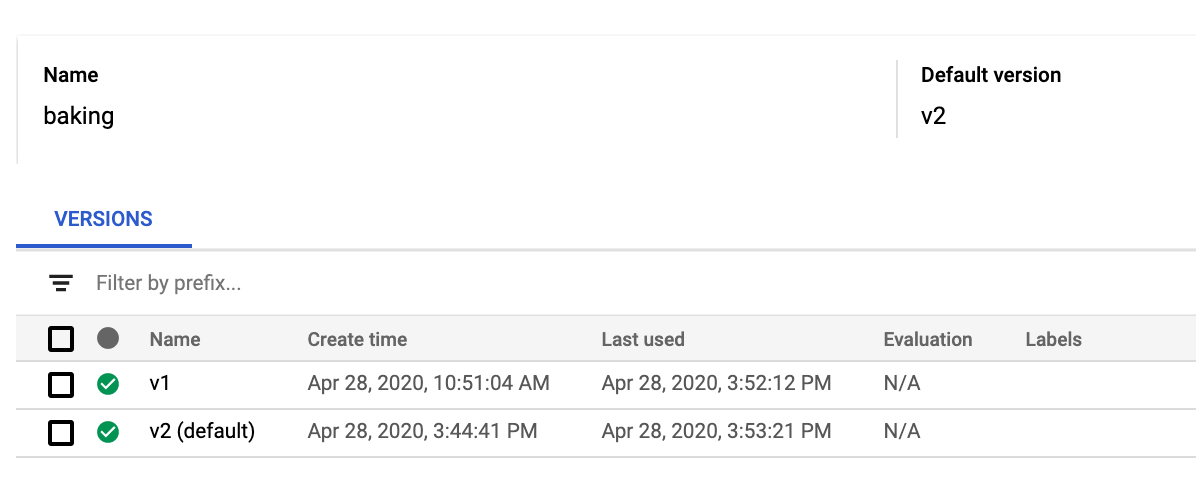

Deploying the model to AI Platform

I don’t want to be the only one who gets to use this model, so it’s time to deploy it. I’m going to deploy it to Google Cloud AI Platform Prediction. In order to do that, I need to use the TensorFlow model.save() method to save my model assets to a Cloud Storage bucket. Then I can use gcloud to deploy my model from the command line, pointing it at the bucket path of my saved model.

When you save your TensorFlow model, you can pass it a local filepath or a Cloud Storage bucket. Here I’ll pass it my GCS bucket directly:

    model.save('gs://my_gcs_bucket/path')

With that, I’m ready to deploy using gcloud (you can also deploy via the UI or the API):

    !gcloud ai-platform versions create 'v1' \
      --model 'baking' \
      --origin 'gs://path/to/saved/model' \
      --runtime-version 2.1 \
      --framework TENSORFLOW \
      --python-version 3.7

When your model has deployed, you’ll see it in your cloud console like this:

Building a web app

🙏 Shout out to my teammate David for his help on the web app 🙏

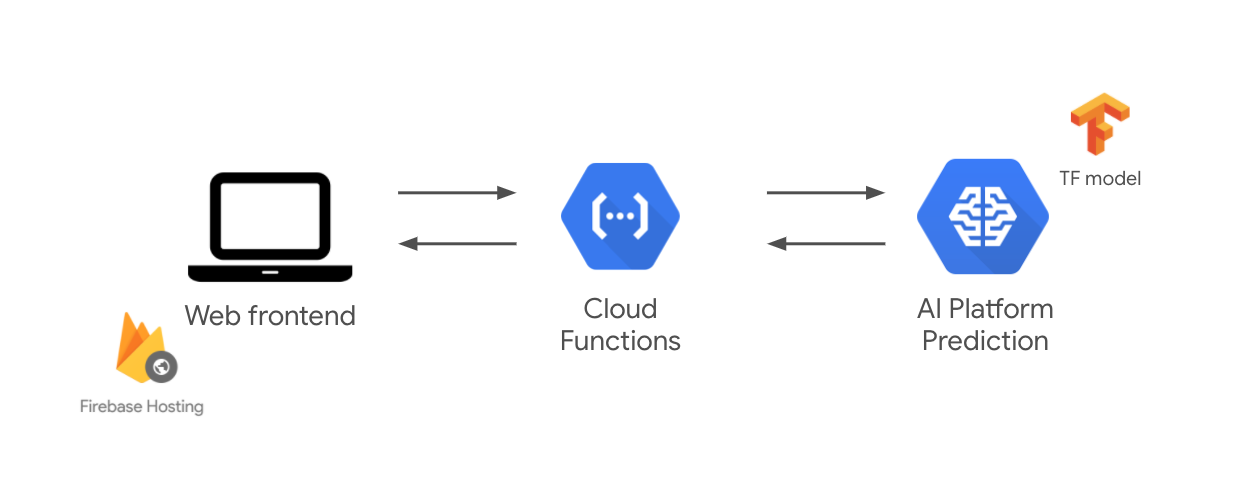

It would be fun if I had a basic web app to get predictions on my model. That way I could quickly experiment with different ingredient ratios. Remember that my model needs the ingredient inputs all converted to cups and then scaled as percentages of the total recipe. It would be mean if I made people do those calculations on their own, so this should probably be handled server side, which is a great use for Cloud Functions.

I’ll use the Python runtime and write a function that takes the ingredient inputs in their user-friendly units and converts them to cups and then to percentages. From the same function I can send that scaled input to my model and return a nice, readable prediction to the web app. A snippet of the function is below, and you can find it all in this gist.

    import numpy as np

    # columns, scale_data, and predict_json are defined elsewhere in the full function
    def get_prediction(request):
        data = request.get_json()
        prescaled = dict(zip(columns, data))
        scaled = scale_data(prescaled)

        # Send scaled inputs to the model
        prediction = predict_json('gcp-project-name', 'baking', scaled)

        # Get the item with the highest confidence prediction
        predicted_ind = np.argmax(prediction)
        label_map = ['Bread', 'Cake', 'Cookies']
        baked_prediction = label_map[predicted_ind]
        confidence = str(round(prediction[predicted_ind] * 100))

        if baked_prediction == 'Bread':
            emoji = "It's bread! 🍞"
        elif baked_prediction == 'Cake':
            emoji = "It's cake! 🧁"
        elif baked_prediction == 'Cookies':
            emoji = "It's cookies! 🍪"

        return "{} {}% confidence".format(emoji, confidence)

And here’s the app! I will preface this by saying that I’m lucky to work with amazingly talented people. I tweeted an early version of this web app and David volunteered to make it look, well, a lot better:

If you want to play around with it yourself, it’s here. I’m sure you’ll try to do something weird with it (this is the internet after all), just keep in mind that the model has been trained on minimal data and it only knows about 3 things: bread, cake, and cookies. So if you enter the ingredients for a brownie or anything else, it’ll do its best to slot it into the only 3 categories it knows about. Think of it like a baby who only knows 3 words. For example, if I enter 10,000 eggs and nothing else, the model predicts 96% cake. Such is the beauty of machine learning. It’s garbage in, garbage out.
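A note for anyone curious about the `predict_json` helper called in the Cloud Function snippet: it isn’t shown in this post. Below is a minimal sketch of what such a helper could look like, assuming it follows the online prediction pattern from Google’s AI Platform samples with the `googleapiclient` library. The `build_predict_request` helper is a name I made up to keep the request-building part separate, and the exact shape of the returned predictions is an assumption that depends on how the model was exported.

```python
def build_predict_request(project, model, instances, version=None):
    """Build the resource name and JSON body for an online prediction call."""
    name = "projects/{}/models/{}".format(project, model)
    if version is not None:
        name += "/versions/{}".format(version)
    return name, {"instances": instances}


def predict_json(project, model, instances, version=None):
    """Send instances to a deployed AI Platform model and return its predictions."""
    # Imported here so the helper above works without the client library installed
    import googleapiclient.discovery

    service = googleapiclient.discovery.build("ml", "v1")
    name, body = build_predict_request(project, model, instances, version)
    response = service.projects().predict(name=name, body=body).execute()

    if "error" in response:
        raise RuntimeError(response["error"])
    return response["predictions"]
```

Calling `predict_json` needs Google Cloud credentials and a deployed model, so only the request-building half can be tried locally.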

Experiment time: let’s bake a…cakie

The whole point of my experiment was to see if I could understand ingredient ratios enough to start inventing my own recipes. I played around with my model and found a combination of ingredients that caused it to predict 50% chance of cake, 50% chance of cookie. I wondered…what would happen if I actually made it? So I did. Here’s the result:

It is yummy. And it strangely tastes like what I’d imagine would happen if I told a machine to make a cake-cookie hybrid. My fiancé agrees.

I’ve never made my own recipe before, and while I wish you could taste it, you can have the next best thing: the recipe! I added two ingredients for some extra flavor that aren’t part of my model inputs (vanilla extract and chocolate chips), but the rest adhered strictly to what my model told me would be 50% cake, 50% cookie. I present to you the cakie.

Cakie

Ingredients

- ½ cup + ¾ tablespoon butter, cold

- ¼ cup granulated sugar

- ¼ cup brown sugar, packed

- 1 large egg

- ¼ cup olive oil

- ⅛ teaspoon vanilla extract

- 1 cup flour

- 1 teaspoon baking powder

- ¼ teaspoon salt

- ¼ cup chocolate chips

Preheat oven to 350 degrees F. Lightly grease a 6-inch cake pan with cooking spray or butter. If you don’t have a 6-inch cake pan, a loaf pan will probably work too (let me know if you try this!).

Cut the butter into pieces and place into a mixer. On medium speed, mix the butter until smooth. Add in both sugars and mix until combined. Then add the egg, oil, and vanilla extract.

In a separate bowl, whisk the flour, baking powder, and salt. Slowly incorporate it into the butter mixture, mixing on low speed until just combined. Mix in chocolate chips if you like them.

Bake for 25 minutes or until a toothpick inserted in the center comes out clean. Let the cakie cool in the pan for at least 20 minutes before removing it (which is not what I did).

Mixing it all together

This was a fun ML experiment that resulted in a new dessert. I started by compiling a tiny dataset of 99 recipes and using that to train a TensorFlow model to predict whether something is a bread, cake, or cookie based on the ingredients. Then I deployed it to AI Platform Prediction, and built a web app that calls the model via a Python Cloud Function. Finally, I used the deployed model to invent a new dessert which shall forever be known as the cakie. Here are some useful links:

- What are you baking?

- TensorFlow

- AI Platform Prediction

- Cloud Functions

- Python code for my Cloud Function

I’d love to hear what you thought of this post, whether you were in it for the ML or the recipe 😋 Find me on Twitter at @SRobTweets.